A collaborative self-study approach, including co-researcher focus groups (the “Debrief”), provides an opportunity for guided reflection on the experience. The Debrief is also a qualitative evaluation technique for capturing faculty members’ perceptions of the efficacy of using institutional rubrics as part of the action research SAIL Planning Cycle.

The Debrief is scheduled for 90 to 120 minutes, depending on group size, and engages faculty co-researchers in a reflective assessment of the SAIL process and of the overarching Research Questions, which ask about the efficacy and utility of the institutional rubrics and results. In addition, the Debrief gathers feedback on the alignment of the project with the six principles for learning outcomes assessment. Information gathered during the Debrief, together with written feedback collected during the assessment of learning process, is used to inform recommendations for future action research cycles.

Semi-Structured Debrief Prompts

The Debrief Prompts (listed below) are organized into three themes that address the two research questions and whether the SAIL method followed a principles-focused approach. The semi-structured prompts are intended to guide the conversation; however, space is left for dialogue to flow in the direction that faculty members deem important. The Faculty Debrief Questionnaire (PDF) for conducting the Debrief focus group is also available for download.

Focus Groups: Semi-Structured Debrief Prompts

Opening

How was your overall experience of the process, the rubric, the assessing, and the course-specific report?

Potential Prompts:

- In what ways were they useful?

- In what ways were they not useful?

- Was the course-specific report readable/clear?

- What are insights from the results for improving your course going forward?

- What are insights from the process for improving your course going forward?

Theme 1: Efficacy of institutional rubrics for assessing and demonstrating the degree of student achievement of ILOs in ILO-approved courses

- How effective were ILO rubrics for understanding student achievement of the ILO?

- How about at the program and course level?

- In what ways was the rubric effective?

- In what ways was the rubric not effective?

Theme 2: Utility of process for informing curriculum and learning planning and practices to continuously improve student learning

How useful are the results that you/faculty received as part of the pilot project for informing curriculum changes?

- How feasible is embedding institutional rubrics in ILO-approved courses? (Prompt for perceptions of their usefulness, meaning, integrity, and adaptability.)

- What is the likelihood of colleagues/other faculty members adopting the institutional rubrics?

- What is the scalability of this process?

- What would help with sustainability (e.g., ongoing community, consistent interface)?

- Next steps and future considerations:

  - What to keep doing?

  - What to try next?

Theme 3: Alignment with Principles for Learning Outcomes and Assessment

3.1. Equitable and Learner-centred

- How well did the process and rubric reflect (and represent) the diversity of student learning?

- Does the rubric privilege one or more ways of knowing?

3.2. Growth and Learning-oriented

- How can we maintain a growth focus?

- How can we maintain an environment in which faculty feel safe, with a focus on formative improvement for learning?

- How can we be transparent with students?

3.3. Purposeful and Holistic Design

- Did the rubrics feel like they reflected the ILO you were assessing?

- How well did the process and rubric reflect authentic assessment?

- How can we best assess team-based learning going forward?

3.4. Ongoing Cyclical Improvement

- How credible did the rubric feel? How credible did the process feel? Any concerns about the process?

- How could the process be made more sustainable?

- Did the process feel clear, transparent, and collegial?

- Are rubrics a viable approach?

3.5. Faculty-designed for Learning

- How well did the assessment approach reflect the work and knowledge of your/the discipline?

- How well did the assessment approach align with existing governance structures and faculty-led teaching and learning?

- What was it like having a colleague evaluate your students’ work? Would it be better, the same, or worse if you assessed the students yourself? Why? Would the rubrics be relevant to grading, or would it be best to keep them separate?

- What would collegial reporting and sharing look like?

3.6. Reflexive Approach to Learning

- How useful is this process for intentionally reviewing and using assessment data to inform teaching and learning changes?

- How supportive is this process for continuous improvement through creative inquiry and curiosity?

- Are the results received as part of the pilot project process useful for informing curriculum changes?

Interpretation of Faculty Responses and Creation of Final Report

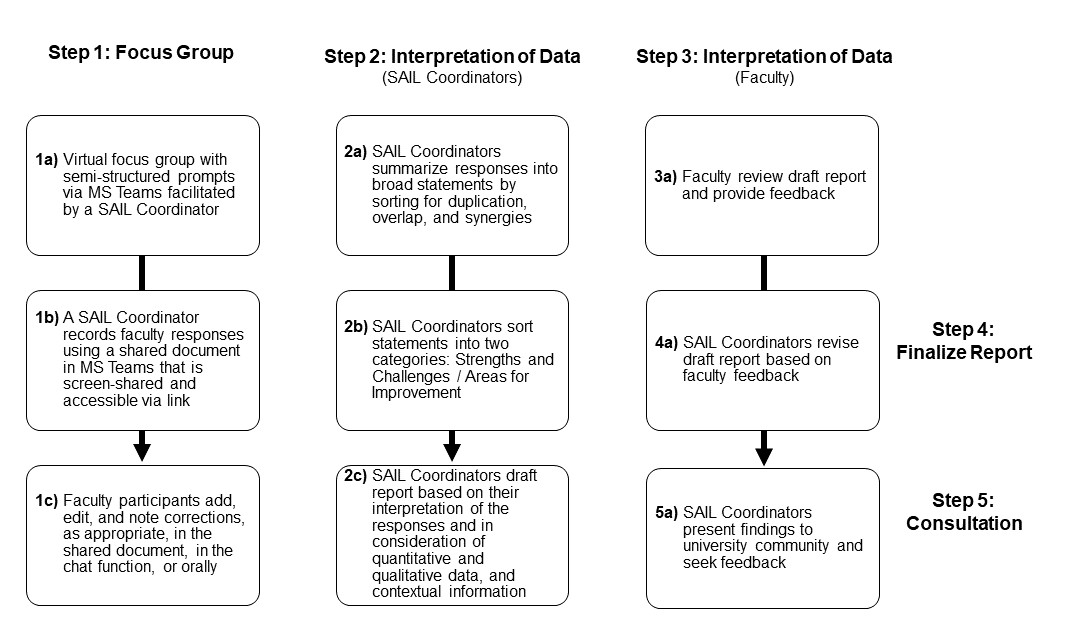

The Debrief is facilitated by a SAIL Coordinator. During Pilot #1, we hosted a joint Debrief that included all ILO Pod members. During Pilot #2, we hosted a separate Debrief for each ILO Pod because it was difficult to find a mutually available time. During each Debrief, one of the SAIL Coordinators recorded the faculty responses in a shared document. Figure 3 depicts the methodology used to interpret the faculty responses gathered during the Debrief and shows how the data contribute to the creation of a SAIL Pilot Final Report.

Following the Debrief, the SAIL Coordinators collaboratively reviewed the recorded responses and completed the following steps to interpret the data collected:

- organized responses by the three themes described in the Debrief questionnaire;

- summarized responses into broad statements by sorting for duplication, overlap, and synergies;

- sorted statements into 1) Strengths and 2) Challenges/Areas for Improvement;

- incorporated quantitative analysis of student consent rates, descriptive assessor ratings, and relevant contextual information that emerged during the pilot (e.g., internal and external environmental factors such as the global pandemic, participation rates of faculty in ILO Pod activities, time delays);

- drafted a summary report, including recommendations based on the SAIL Coordinators’ interpretation of the faculty responses in the context of the additional quantitative and qualitative data, and environmental factors;

- shared, via email, the summary report with faculty and provided an opportunity for faculty to edit, comment, and clarify findings using track changes;

- revised the report based on faculty feedback; and,

- initiated the Consultation phase.

See Institutional Consultation and Reporting for more details regarding the Consultation phase, including a description of the knowledge dissemination channels at the course, departmental, and institutional level.